Content systems vs. pattern libraries

Why we're moving to more guidance over boxes

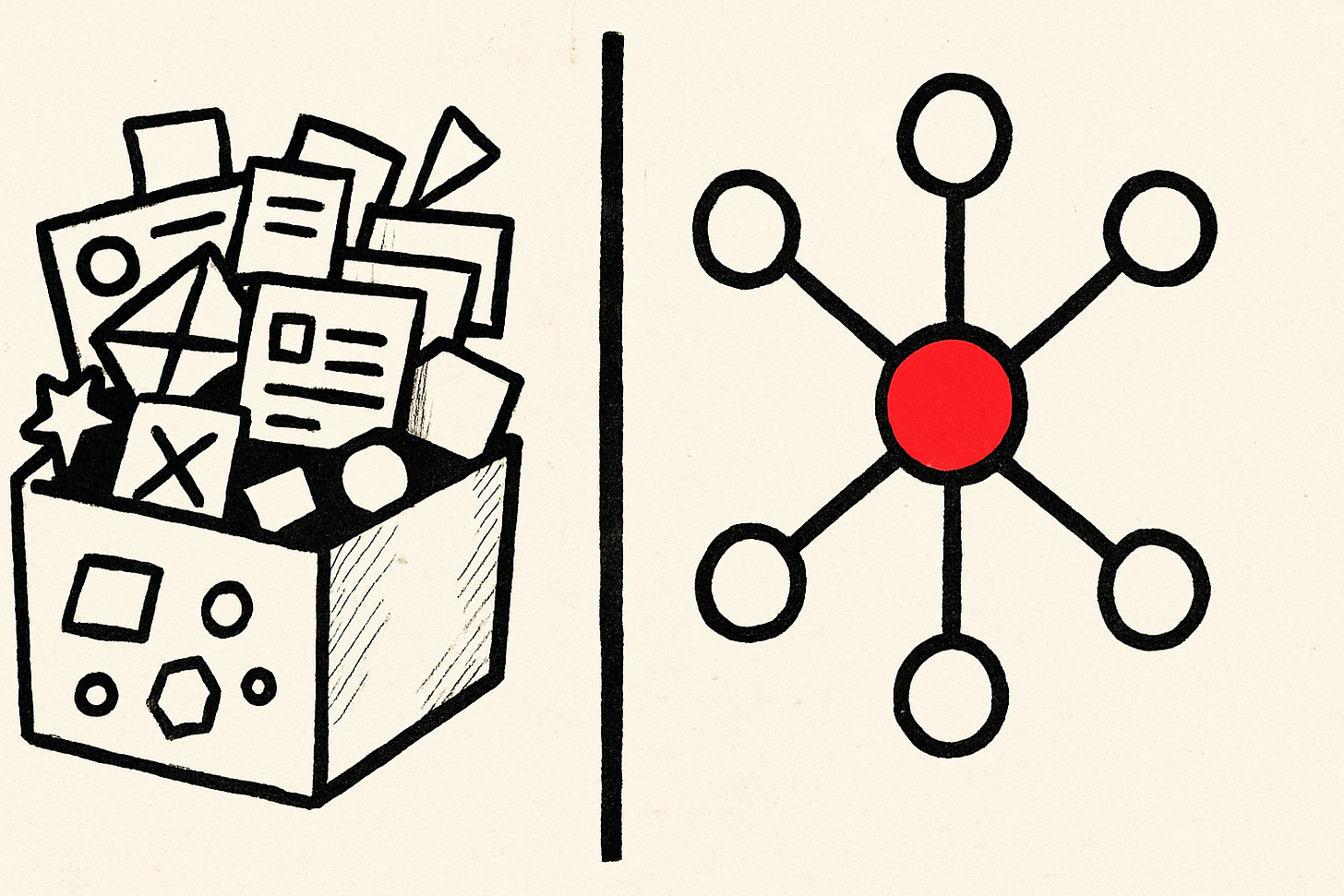

What’s the difference, and why does it matter? For starters, some of our content design systems probably look like the inside of Mary Poppins’s bag.

A Notion page or Google Doc with a “Voice and Tone” section (maybe some adjectives like “friendly, clear, professional”). A collection of microcopy examples showing the “right” way to write error messages, buttons, and empty states. Perhaps some templates for common patterns. Maybe a link to the brand guidelines.

Now open your product design system. You’ll find something entirely different.

There are component anatomies showing how pieces fit together. Accessibility guidelines explaining not just what to do, but why. Responsive behavior documentation. Compositional patterns showing how components combine. Principles for making tradeoff decisions. Token systems that cascade through everything.

One is a collection of examples. The other is a system of thinking.

Guess which one scales? Guess which one helps people make good decisions in novel situations? Guess which one actually works when you’re not in the room?

Content design is stuck at the pattern library phase while product design has moved to systematic thinking. And this gap is costing us.

Why pattern libraries fail at scale

Pattern libraries work great when your team is five people and everyone talks daily. You point at an example, you align on what “good” looks like, and you’re done.

But pattern libraries break down predictably, especially across an enterprise or simply over time. Lullabot’s 2024 analysis of design systems at scale identified the exact failure modes.

They documented component drift, naming problems, content flexibility issues, and governance breakdown—all symptoms of pattern-based rather than systematic thinking.

They don’t handle edge cases. Your example shows a friendly error message for “invalid email,” but what about when the user’s account is locked for suspicious activity? What about when we need to tell them their credit card was declined? The library says “be friendly,” but some situations need authority, not friendliness.

They multiply instead of systematizing. Each new edge case becomes a new pattern. Soon your “system” is 200 examples sorted by component type. Nobody can find anything. As Lullabot (I do quote Jeff’s team a LOT here, don’t I?) observed: “Have you ever run across a component that wasn’t being used correctly? Components are used with the wrong character count or even the wrong content type... This type of misuse comes from content flexibility.”

They don’t explain tradeoffs. When conciseness conflicts with completeness, which wins? When user expectations conflict with brand voice, who decides? The pattern library shows you what to do when everything aligns, but real work happens in the messy middle.

They can’t be interrogated. When someone asks “why did you write it this way?” you can only say “because the pattern library shows an example like this.” You can’t explain the reasoning. You can’t help them understand the principle so they can apply it to new situations.

They don’t work for AI. Anthropic’s engineering team made this crystal clear in their 2024-2025 guidance on “Effective context engineering for AI agents”: “Teams will often stuff a laundry list of edge cases into a prompt in an attempt to articulate every possible rule.” This approach fails. Instead, they recommend “curating a set of diverse, canonical examples that effectively portray the expected behavior.” But even those examples need underlying principles: “examples are the ‘pictures’ worth a thousand words,” but you still need the words.

Most importantly: pattern libraries are prescriptive, not generative. They tell you what to make, but not how to think. And if your system can’t help people think better, it’s just bureaucracy.

The scale problem we can’t ignore

The numbers are damning. Contentful’s 2024 survey of 1,000 C-suite executives found that 80% identified “lack of centralized oversight leading to inconsistent branding” as their top challenge when managing content at scale. And scale matters: 68% of brands say consistency contributed 10-20% of their revenue growth according to a Stanford study cited in the same research.

This isn’t a small problem. 81% of brands struggle to maintain a unified global brand identity across diverse markets. Pattern libraries can’t solve this. As Lullabot noted: “A single centralized system can also become a bottleneck. It can take weeks, sometimes months, for new components or changes to trickle down. This can have serious business implications.”

What design systems teach us

Design systems evolved beyond component libraries because designers recognized a crucial truth: consistency comes from shared thinking, not identical outputs.

Nielsen Norman Group’s comprehensive 2024 analysis of content in design systems reveals how mature organizations approach this. They studied systems from Adobe Spectrum, Sainsbury’s, Atlassian, Shopify Polaris, Mailchimp, Salesforce Lightning, and Intuit—all of which have evolved far beyond pattern libraries.

They encode principles, not just patterns. As Nielsen Norman noted: “Unlike UI components in a design system, content standards aren’t followed through mere duplication. Content designers aren’t just reusing the exact phrases or the same formulaic sentence structure. Instead, content standards ensure that every piece of content, though unique, feels part of a cohesive whole.”

They document the why, not just the what. Adobe Spectrum includes not just global content sections but a “design checklist for each UI pattern.” Sainsbury’s includes “design foundations, component guidelines, and an entire section for content standards.” Understanding the reasoning helps teams apply principles to novel situations.

They create decision frameworks. Intuit’s content standards were so comprehensive they lived on a separate site. Not because they were verbose, but because they included decision trees and contextual guidance for complex situations.

They enable composition. Components are designed to work together in predictable ways. You can combine them in novel configurations and trust they’ll behave coherently. The system is generative, not just prescriptive.

They establish boundaries. Design systems clarify what’s in-system (predictable, reusable, supported) and what’s custom (requires design review, unique to context). This makes the system scalable instead of exhaustive.

They make tradeoffs visible. When accessibility conflicts with aesthetics, the system documents how to navigate the tension. When performance conflicts with polish, there’s a framework for deciding. The system helps teams make hard calls, not just easy ones.

This is what systematic thinking looks like. And content design desperately needs it.

What a real content system includes

If we built content design systems the way product teams build design systems, what would they include?

1. Principles with hierarchies

Not just “be clear, be concise, be conversational.” That’s the equivalent of saying “make it pretty.”

Real principles have:

Rationale: Why does this matter? What user problem does it solve?

Hierarchy: When principles conflict, which wins? “Be concise” vs. “be complete” creates tension. How do we resolve it?

Application guidance: What does this look like in practice? How do you know when you’re doing it right?

Content Science’s 2023 research with 125+ content leaders emphasized this need. As Colleen Jones noted: “Organizations [need] to articulate their level of content operations rather often.” Without principle hierarchies, every decision becomes a negotiation.
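To make the idea of a principle hierarchy concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Principle` fields, the three sample principles, and the priority order are hypothetical, not taken from any of the systems cited here. It only shows that "which wins?" can be written down rather than argued case by case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Principle:
    name: str
    rationale: str   # why it matters, what user problem it solves
    priority: int    # lower number wins when principles conflict
    guidance: str    # what doing it right looks like in practice


# Hypothetical principles and ordering; a real team would set its own.
PRINCIPLES = [
    Principle("complete", "Users need confidence before high-stakes decisions", 1,
              "Every fact the user needs is present before they commit"),
    Principle("clear", "Users should not have to reread", 2,
              "One idea per sentence, plain words"),
    Principle("concise", "Interruptions are costly mid-task", 3,
              "Cut anything the user does not need right now"),
]


def resolve(conflicting: list[str]) -> Principle:
    """Return the highest-priority principle among the named ones."""
    candidates = [p for p in PRINCIPLES if p.name in conflicting]
    if not candidates:
        raise ValueError(f"No known principle in {conflicting}")
    return min(candidates, key=lambda p: p.priority)


winner = resolve(["concise", "complete"])
print(f"{winner.name} wins: {winner.rationale}")
```

A flat ordering like this is the simplest possible hierarchy. Many teams will want the order to depend on context, which is where decision frameworks come in.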

2. Decision frameworks for common tensions

Content designers navigate tradeoffs constantly. A real system helps them decide:

Conciseness vs. completeness

Use conciseness when: Users are in a flow state and interruptions are costly

Use completeness when: Users are making important decisions and need confidence

Example: Checkout button labels are concise (“Continue”), but financial disclosures are complete.
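The framework above can be sketched as a small function. This is one possible encoding, not a definitive one: the `Context` fields and the tie-breaking rules are assumptions, labeled in the comments, and a real team would tune them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Context:
    in_flow: bool       # user is mid-task and interruptions are costly
    high_stakes: bool   # user is making an important decision


def choose_style(ctx: Context) -> str:
    """Pick 'complete' or 'concise' for a piece of copy."""
    # Assumption: when both signals apply, the need for confidence wins.
    if ctx.high_stakes:
        return "complete"
    # Assumption: with no high-stakes signal, default to concise,
    # whether or not the user is in a flow state.
    return "concise"


checkout_button = Context(in_flow=True, high_stakes=False)
financial_disclosure = Context(in_flow=False, high_stakes=True)

print(choose_style(checkout_button))
print(choose_style(financial_disclosure))
```

The value is less in the code than in the forced choice: someone had to decide what happens when a user is both in a flow state and making a high-stakes decision.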

These frameworks become even more critical with AI. As Anthropic’s research shows, you can’t just provide examples—you need “natural progression” from prompt engineering to context engineering, with clear rules for decision-making.

3. Content patterns with anatomy

Not just “here’s a good error message.” Instead:

Pattern name: Error message for action failure

When to use: User attempted an action that failed due to system or data issues

Anatomy:

What happened (required): Clear statement of current state

Why it happened (contextual): Explanation if it helps user understand or prevent recurrence

What to do (required): Clear next action the user can take

How to get help (contextual): Support option if user is likely blocked

This anatomical approach mirrors what Nielsen Norman found in successful design systems—structure that enables appropriate variation rather than demanding identical implementation.
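As a rough illustration, the error message anatomy above maps naturally onto a small data structure with required and contextual parts. The class name, field names, and sample copy below are hypothetical; the sketch only shows how structure can permit variation while still enforcing the required parts.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ErrorMessage:
    what_happened: str                      # required
    what_to_do: str                         # required
    why_it_happened: Optional[str] = None   # contextual
    how_to_get_help: Optional[str] = None   # contextual

    def __post_init__(self) -> None:
        # Reject messages that leave out a required part of the anatomy.
        for field_name in ("what_happened", "what_to_do"):
            if not getattr(self, field_name).strip():
                raise ValueError(f"{field_name} is required")

    def render(self) -> str:
        # Keep the anatomy's order and skip contextual parts that are absent.
        parts = [self.what_happened, self.why_it_happened,
                 self.what_to_do, self.how_to_get_help]
        return " ".join(p.strip() for p in parts if p and p.strip())


msg = ErrorMessage(
    what_happened="Your card was declined.",
    what_to_do="Try a different payment method.",
    how_to_get_help="Contact support if this keeps happening.",
)
print(msg.render())
```

Two writers can produce very different messages from this shape, and both will still tell the user what happened and what to do next.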

4. Channel and context guidance

The same content works differently in different contexts. A real system acknowledges this.

As Lullabot observed: “Managing the balance between flexibility and consistency” is a core challenge. Systems need to provide guidance without becoming rigid.

5. AI and automation guidelines

Your system needs to guide AI-generated content. Nielsen Norman Group was explicit: “Establishing content standards is also essential before you can leverage AI-based tools to automate, scale, and expedite the content-design process.”

Anthropic’s guidance goes further, warning about “context rot”: “as the number of tokens in the context window increases, the model’s ability to accurately recall information from that context decreases.” This means your system needs to spell out:

Prompt engineering principles

Provide role and expertise level

Specify output structure and constraints

Include brand voice through examples and rules, not just adjectives

Test edge cases and failure modes

Human review triggers

Always review: Legal, financial, security-critical content

Spot review: User-facing content that’s generated from novel inputs

Never review: Internal notifications, system logs

Without this, AI becomes a consistency nightmare instead of a scaling solution.
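The human review triggers listed above are simple enough to express as a lookup. The sketch below is an assumption-heavy illustration: the category labels are hypothetical, and the choice to spot review anything unclassified is mine, not a rule from the sources cited here.

```python
from enum import Enum


class Review(Enum):
    ALWAYS = "always review"
    SPOT = "spot review"
    NEVER = "never review"


# Hypothetical category labels for the tiers listed above.
ALWAYS_REVIEW = {"legal", "financial", "security"}
NEVER_REVIEW = {"internal_notification", "system_log"}


def review_level(category: str) -> Review:
    """Map a content category to the level of human review it needs."""
    if category in ALWAYS_REVIEW:
        return Review.ALWAYS
    if category in NEVER_REVIEW:
        return Review.NEVER
    # Covers user-facing content generated from novel inputs.
    # Assumption: anything unclassified also gets a spot check, not a free pass.
    return Review.SPOT


for category in ("legal", "system_log", "onboarding_tooltip"):
    print(category, "->", review_level(category).value)
```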

6. Governance and contribution models

How does the system evolve? Who can add to it? What’s the process?

Content Science’s research shows that organizations at higher maturity levels have clear governance models. But as they note, even in 2023, organizations are “needing to articulate their level of content operations rather often”—suggesting that governance remains a challenge.

How AI exposes our lack of systems

The rise of AI-generated content is brutally exposing the gap between pattern libraries and real systems.

When you tell an LLM to “write this in our brand voice,” you get word salad. The model needs actual rules:

“Use active voice” is a rule

“Be friendly” is a vibe

Anthropic’s research articulates this clearly: “Providing examples, otherwise known as few-shot prompting, is a well known best practice... However, teams will often stuff a laundry list of edge cases into a prompt in an attempt to articulate every possible rule.” This doesn’t work. You need systematic principles.

When you try to systematize AI outputs at scale, you discover that your “content guidelines” are actually just tribal knowledge. The unwritten rules were in people’s heads, and now you need to write them down in a way machines can process.
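Here is one hedged sketch of what "a way machines can process" might look like: a voice guide stored as structured data and rendered into prompt text. The `VoiceGuide` class, its fields, and the sample rules are all hypothetical, and the output is plain text rather than a call to any particular model API.

```python
from dataclasses import dataclass, field


@dataclass
class VoiceGuide:
    role: str                 # role and expertise level for the model
    rules: list[str]          # checkable rules, not adjectives
    constraints: list[str]    # output structure and limits
    examples: list[tuple[str, str]] = field(default_factory=list)  # (situation, copy)

    def to_prompt(self) -> str:
        """Render the guide as plain prompt text."""
        lines = [f"Role: {self.role}", "", "Rules:"]
        lines += [f"- {rule}" for rule in self.rules]
        lines += ["", "Output constraints:"]
        lines += [f"- {constraint}" for constraint in self.constraints]
        if self.examples:
            lines += ["", "Examples:"]
            lines += [f"- {situation}: {copy}" for situation, copy in self.examples]
        return "\n".join(lines)


guide = VoiceGuide(
    role="Senior content designer for a consumer finance app",
    rules=["Use active voice", "Say what the user can do next"],
    constraints=["One sentence", "No more than 90 characters"],
    examples=[("Card declined", "Your card was declined. Try another card.")],
)
prompt = guide.to_prompt()
print(prompt)
```

Note what is missing: there is no field for “friendly.” If a quality can’t be written as a rule or shown in an example, this structure has nowhere to put it, which is the point.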

This is actually a gift. AI is forcing content designers to articulate what we’ve always done intuitively. It’s making implicit expertise explicit. It’s revealing where our “systems” are really just vibes.

The teams that figure this out first will have an enormous advantage. They’ll be able to scale content production without sacrificing quality. They’ll be able to maintain coherence across AI-generated, human-written, and hybrid content. They’ll be able to train new team members faster because the system encodes institutional knowledge.

The teams that don’t will drown in inconsistency, quality issues, and endless review cycles. The evidence is clear: 80% of organizations already struggle with consistency at scale. AI will make this exponentially worse without systematic approaches.

From pattern library to content system: A practical path

You can’t rebuild your entire content system overnight. But you can start moving from patterns to systems:

Start with one high-impact tension. Pick a tradeoff your team faces constantly. Document the decision framework. Test it. Refine it. Expand from there.

Make implicit rules explicit. When you review someone’s work and say “this doesn’t feel right,” stop and articulate why. What principle does it violate? What would make it right? Write it down.

Document the why, not just the what. Every pattern in your library should explain its rationale. As Nielsen Norman’s research shows, understanding reasoning is what enables teams to “ensure that every piece of content, though unique, feels part of a cohesive whole.”

Create content patterns with anatomy. Show the structure, not just examples. Help people understand what makes a pattern work so they can adapt it.

Build for AI from the start. Write guidelines that work for both humans and machines. If an LLM can’t follow your guidance, it’s not systematic enough. Remember Anthropic’s advice: work to “curate a set of diverse, canonical examples that effectively portray the expected behavior.”

Test the system’s generativity. Give someone an unfamiliar problem and see if they can use your system to solve it. If they have to ask you, the system isn’t working.

Evolve continuously. The system should change as you learn. Static systems become obsolete. Living systems become valuable.

What systematic thinking enables

When you move from pattern libraries to real systems, you unlock capabilities that seemed impossible:

You can scale without adding headcount. Teams can make good content decisions without routing everything through the content design team.

You can maintain coherence across contexts. Content adapts appropriately to different channels and use cases while staying meaningfully consistent, which speaks directly to the 81% of brands struggling with a unified identity.

You can onboard people faster. New team members understand the thinking, not just the templates. They contribute meaningfully in weeks instead of months.

You can integrate AI confidently. You have actual rules and principles to guide automated content, not just vibes and examples.

You can evolve your approach systematically. When you discover a better practice, you can update the principle and everything downstream shifts coherently.

You can make your expertise legible. Instead of content designers being mysterious specialists with opinions, they’re systematic thinkers with frameworks. That builds influence.

Most importantly: you stop being the bottleneck. The system scales your thinking instead of requiring your presence. A single centralized system can also become a bottleneck when it doesn’t enable distributed decision-making.

The maturity gap

Product design figured this out. They evolved from pixel-perfect mockups to systematic thinking. They built design systems that encode principles, enable composition, and support decision-making.

Content design is still thought of as passing around Figma files with variables of button labels.

We’re talented, thoughtful practitioners. But we’re operating at the pattern library level while our projects need systematic thinking. The evidence is overwhelming: 80% of organizations struggle with consistency, 81% can’t maintain brand identity across markets, and AI is about to make this exponentially harder.

The good news: we don’t have to invent this from scratch. Design systems show us the path. Look at how mature organizations approach content systems (we’ll get into that a little deeper). We can adapt their approaches. We can build on their maturity.

The challenge: it requires investment. Building a real content system takes time, rigor, and continuous evolution. It’s not glamorous. It’s not quick. But it’s the work that transforms content design from a craft into a discipline.

As Content Science noted, assessing content operations maturity went from being “a highly useful but infrequent activity” in 2015 to something organizations need “rather often” today. The pressure for systematic approaches is only increasing.

Pattern libraries got us here. Systems will take us forward.

References

Nielsen Norman Group. Content standards in design systems.

Contentful. Controlling content chaos.

Anthropic Engineering Team. Effective context engineering for AI agents.